Thank you, Dave!

I’ve only recently had a chance to evaluate this:

First, I just wanted to note that agent conditions seem to break this:

Agent conditions are probably evaluated after the stage’s agent requirements have already sent the build to the agent matching the shared resource lock expression. If that agent has a condition that prevents this build from running on it, the build waits forever instead of being sent to one of the other free agents that don’t have this condition.
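To make the suspected ordering concrete, here is a toy Python model. It is only an assumption about how the checks might be ordered, not Continua’s actual scheduler: `Agent`, `blocked_configs` and the two `pick_*` functions are hypothetical names, and an agent condition is reduced to a set of configurations the agent refuses.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    name: str
    # Hypothetical stand-in for an agent condition: configurations this agent refuses.
    blocked_configs: set = field(default_factory=set)


def accepts(agent: Agent, config: str) -> bool:
    return config not in agent.blocked_configs


def pick_lock_first(agents, config, free_lock_agents):
    """Suspected order: the lock/requirement match picks the agent, then the condition is checked."""
    for agent in agents:
        if agent.name in free_lock_agents:
            # The build is now committed to this agent. If the condition rejects it,
            # nothing re-routes the build, so it waits indefinitely (modelled as None).
            return agent.name if accepts(agent, config) else None
    return None


def pick_condition_first(agents, config, free_lock_agents):
    """Alternative order: filter agents by condition before matching the lock."""
    for agent in agents:
        if accepts(agent, config) and agent.name in free_lock_agents:
            return agent.name
    return None


agents = [Agent("agent1", {"C1"}), Agent("agent2"), Agent("agent3")]
free = {"agent1", "agent2", "agent3"}
print(pick_lock_first(agents, "C1", free))       # None: the C1 build is stuck on agent1
print(pick_condition_first(agents, "C1", free))  # agent2
```

If conditions were applied before the lock match, the build would simply skip agent1 and land on a free agent.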

Other than that, it seems to work as expected when I only use $Agent.Hostname$.

However, I’m not sure how to use this for our more complex shared resource locking requirement.

I’ll try to explain what I want more clearly; let me know whether you think it’s possible to achieve.

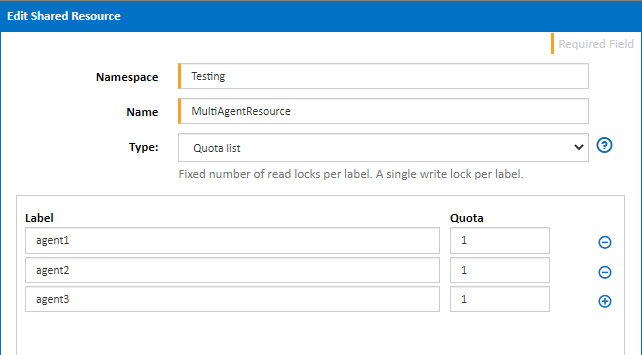

Say I have 3 agents: agent1, agent2, agent3

And I have 3 build configurations: C1, C2, C3

I’d like to enforce the following:

- Only one build of a given configuration may run on a given agent at a time.

So one agent can run C1, C2 and C3 simultaneously, but it can’t accept another C1 while a C1 is already running on it; the new C1 should be routed to a different agent that isn’t currently running a C1. The same goes for all other configurations.
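The rule above amounts to one exclusive lock per (agent, configuration) pair. Below is a minimal Python sketch of that routing, assuming a simplified scheduler that just takes the first agent whose pair is free; `AgentConfigLocks`, `route` and `release` are hypothetical names. In Continua terms I imagine this as a shared resource lock whose name combines the agent hostname with the configuration name, but I haven’t verified how the configuration-name part would be expressed.

```python
class AgentConfigLocks:
    """Hypothetical model: one exclusive lock per (agent, configuration) pair."""

    def __init__(self, agents):
        self.agents = list(agents)
        self.held = set()  # (agent, config) pairs currently locked

    def route(self, config):
        """Lock and return the first agent not already running `config`; None means the build queues."""
        for agent in self.agents:
            key = (agent, config)
            if key not in self.held:
                self.held.add(key)
                return agent
        return None

    def release(self, agent, config):
        """Called when the build finishes, freeing that agent for the same configuration."""
        self.held.discard((agent, config))


locks = AgentConfigLocks(["agent1", "agent2", "agent3"])
print(locks.route("C1"))  # agent1
print(locks.route("C2"))  # agent1 (a different configuration may share the agent)
print(locks.route("C1"))  # agent2 (agent1 already runs a C1)
```

Because the lock key includes the configuration, C1, C2 and C3 never block each other, while a second C1 on the same agent always does.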

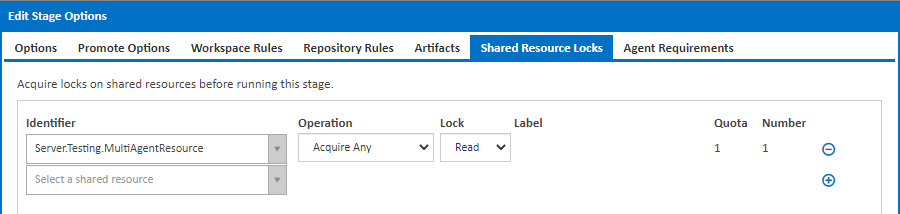

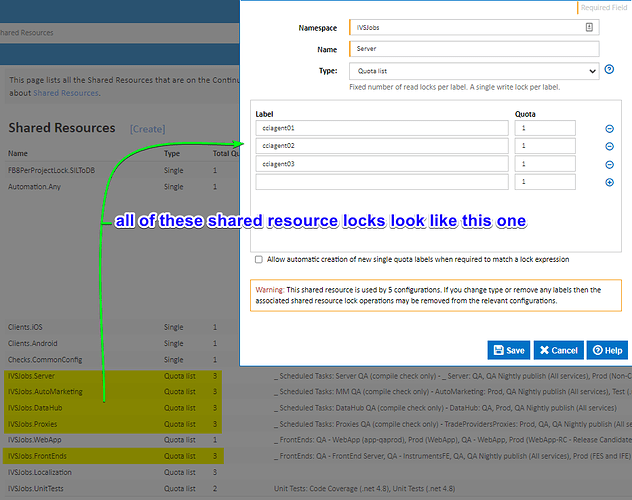

So far I’ve successfully used your agent requirement expression solution, and it works as expected.

However, I can’t figure out how to add a configuration lock that allows different configurations to run simultaneously on the same agent while preventing any single configuration from running more than one build on the same agent.

I don’t know if it matters, but I’m using shared resource locks at configuration scope rather than stage scope, as most of our configurations have just one stage.

(The heavy lifting is done by a FinalBuilder project that runs in that single stage.)

=====

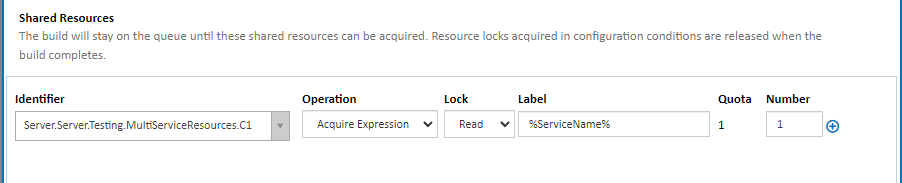

BTW, the next requirement (or wish) after that is to be able to lock based on the services that are being published by these configurations:

When run, each of the C1, C2 and C3 configurations compiles and deploys one or more services from its service list.

(The user selects which services to build and deploy in the variable input form of the queue options):

C1 can compile and deploy any of: C1_Svc1, C1_Svc2, C1_Svc3

C2 can compile and deploy any of: C2_Svc1, C2_Svc2, C2_Svc3

C3 can compile and deploy any of: C3_Svc1, C3_Svc2, C3_Svc3
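To reason about per-service locking, the user’s selection first has to become a set of lock names. This Python sketch assumes the queue variable arrives as a comma-separated string (e.g. `"C1_Svc1,C1_Svc3"`); `service_lock_names` and the `Deploy_` prefix are hypothetical. Sorting the names gives every build the same acquisition order.

```python
def service_lock_names(selected, allowed, prefix="Deploy_"):
    """Turn the user's service selection into one lock name per selected service.

    `selected` is assumed to be a comma-separated string; `allowed` is the
    configuration's service list. Unknown services are rejected.
    """
    services = {s.strip() for s in selected.split(",") if s.strip()}
    unknown = services - set(allowed)
    if unknown:
        raise ValueError(f"not in service list: {sorted(unknown)}")
    return [prefix + s for s in sorted(services)]


C1_SERVICES = ["C1_Svc1", "C1_Svc2", "C1_Svc3"]
print(service_lock_names("C1_Svc3, C1_Svc1", C1_SERVICES))
# ['Deploy_C1_Svc1', 'Deploy_C1_Svc3']
```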

So, in addition to the previous requirement (distributing the builds of each configuration across all agents), I’d like to handle situations like this one:

User A triggers configuration C1 and selects C1_Svc2 for deployment → the build runs on, say, agent2

User B triggers configuration C1 and selects C1_Svc2 for deployment → the build runs on, say, agent3

If we find a solution for the first requirement (agent + configuration lock), these two builds will run simultaneously on two different agents. But since it’s the same service being deployed to our QA environment, both builds will try to deploy to the same destination VM at the same time. I’d like to know whether I can prevent this somehow.
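What I’m after is a second kind of lock that is keyed by service only, not by agent, so it applies across all agents. This Python sketch models that with all-or-nothing acquisition, so a build either gets every selected service or waits without holding any; `ServiceLocks`, `try_acquire_all` and `release_all` are hypothetical names, and I don’t know whether Continua can acquire a variable list of locks like this.

```python
class ServiceLocks:
    """Hypothetical global (not per-agent) locks, one per deployable service."""

    def __init__(self):
        self.owner = {}  # service -> id of the build holding its lock

    def try_acquire_all(self, build, services):
        """Grant every requested service lock, or none of them if any is taken."""
        if any(s in self.owner for s in services):
            return False
        for s in services:
            self.owner[s] = build
        return True

    def release_all(self, build):
        """Drop every service lock held by `build` once its deployment finishes."""
        self.owner = {s: b for s, b in self.owner.items() if b != build}


locks = ServiceLocks()
print(locks.try_acquire_all("A@agent2", ["C1_Svc2"]))  # True: User A deploys
print(locks.try_acquire_all("B@agent3", ["C1_Svc2"]))  # False: User B waits
locks.release_all("A@agent2")
print(locks.try_acquire_all("B@agent3", ["C1_Svc2"]))  # True
```

Taking all locks at once also means two builds with overlapping selections (say C1_Svc1 + C1_Svc2 vs. C1_Svc2 + C1_Svc3) can’t each hold half and deadlock.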