I recently changed our CI setup so that all configurations/stages can run on every agent. Previously, tests ran on thinner clients, so there was a context (agent) switch between stages.

I also have a condition that keeps each configuration running on the same agent for the whole build, so that the workspace does not have to be copied.
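To make the effect of that condition concrete, here is a rough Python model of how I understand agent selection. It is a sketch under my own assumptions, not the CI server's actual logic. The second log below shows the condition as `$Agent.Hostname.ToLower()$ equals $Build.Stages.First().AgentName.ToLower()$`, so only the agent that ran the first stage qualifies, and the same log reports that agent as executing its maximum of 1 concurrent stage. `Agent`, `eligible_agents` and `pick_agent` are hypothetical names, and the limit of 1 for the other agents is assumed.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Agent:
    hostname: str
    max_concurrent_stages: int = 1  # assumed limit; the log only confirms it for wrp-buildcews01
    running_stages: int = 0


def eligible_agents(agents: List[Agent], first_stage_agent: str) -> List[Agent]:
    # Same test as the condition in the log:
    # $Agent.Hostname.ToLower()$ equals $Build.Stages.First().AgentName.ToLower()$
    return [a for a in agents if a.hostname.lower() == first_stage_agent.lower()]


def pick_agent(agents: List[Agent], first_stage_agent: str) -> Optional[str]:
    # Only the agent that ran the first stage qualifies; if it is already
    # running its maximum number of stages, the stage stays in the queue.
    for agent in eligible_agents(agents, first_stage_agent):
        if agent.running_stages < agent.max_concurrent_stages:
            return agent.hostname
    return None


# Situation from the second log: wrp-buildcews01 ran 'Clone' and is now
# reported as executing its maximum number of concurrent stages (1).
agents = [
    Agent("wrp-buildcews01", running_stages=1),
    Agent("wrpbuildce02"),
    Agent("wrp-testauto01"),
]
print(pick_agent(agents, "wrp-buildcews01"))  # None: the stage keeps waiting
```

If this model is right, a pinned stage can wait indefinitely whenever something else occupies the one eligible agent, even if other agents are free.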

However, this issue also happens in a single-stage configuration.

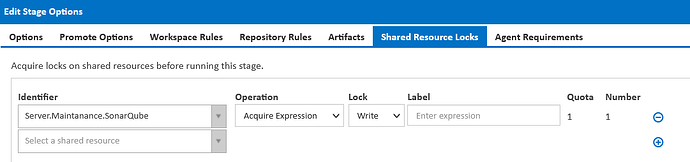

Could it be that there is an agent/context switch, and two stages are using the same shared lock, which creates a deadlock?

Or is there something wrong with the stage queueing? It looks like two identical stages are queued.
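One way I could check the deadlock theory is to note, from the build logs, what each waiting stage is blocked on (an agent slot or a shared-resource lock) and which stage currently holds that, and then follow the chain. A cycle would be a real deadlock; a chain that ends at a stage that is running (or hung) means the agent is simply occupied. A minimal Python sketch of that check, where `find_cycle` and all node names are hypothetical and would have to be filled in by hand from the logs:

```python
from typing import Dict, List, Optional


def find_cycle(waits_for: Dict[str, str]) -> Optional[List[str]]:
    """Return the first cycle in a 'blocked on' map, or None if there is none.

    Each key is blocked on its value: a queued stage maps to the agent or lock
    it needs, and a busy agent or held lock maps to the stage holding it.
    """
    for start in waits_for:
        path: List[str] = []
        seen = set()
        node = start
        # Walk the chain until it ends (node is not blocked) or revisits a node.
        while node in waits_for and node not in seen:
            seen.add(node)
            path.append(node)
            node = waits_for[node]
        if node in seen:
            return path[path.index(node):]
    return None


# Hypothetical stall: the queued stage waits for an agent that is busy with a
# stage which is not itself blocked, so there is no cycle, just a long wait.
stall = {
    "Build B / Build IBIS project": "agent wrp-buildcews01",
    "agent wrp-buildcews01": "Build A / some running stage",
}
print(find_cycle(stall))  # None

# Hypothetical deadlock: each stage holds what the other one needs.
deadlock = {
    "stage 1": "shared lock",
    "shared lock": "stage 2",
    "stage 2": "agent X",
    "agent X": "stage 1",
}
print(find_cycle(deadlock))  # ['stage 1', 'shared lock', 'stage 2', 'agent X']
```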

Can you advise on how to debug this?

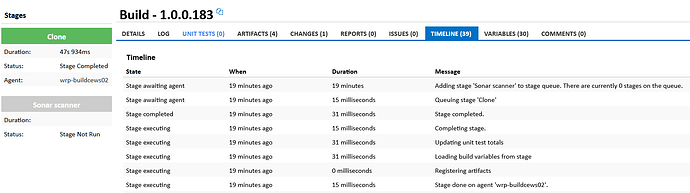

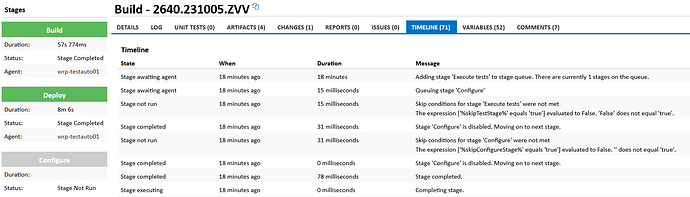

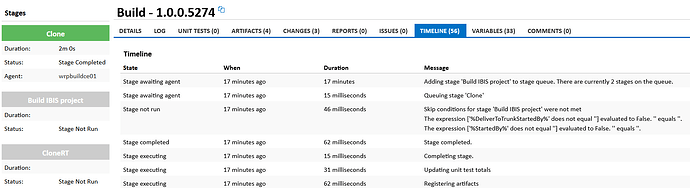

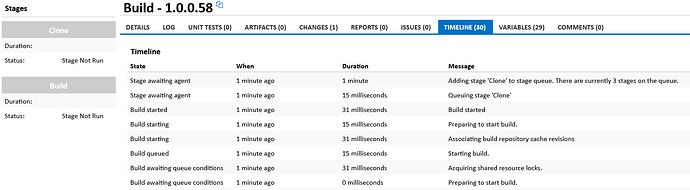

Below are two examples (build logs, newest entry first):

| Status | Time | Duration | Message |
|---|---|---|---|
| Build stopped by user | 1 hour, 13 minutes ago | 0 milliseconds | Build stopped by user ‘------------’ |
| Build stopping | 1 hour, 13 minutes ago | 484 milliseconds | Stopping build. |
| Stage initialising | 1 hour, 13 minutes ago | 156 milliseconds | Updating unit test totals |
| Stage initialising | 1 hour, 13 minutes ago | 15 milliseconds | Sending stage ‘Test GUI’ to agent ‘wrpbuildce02’ |
| Build stopping | 1 hour, 13 minutes ago | 15 milliseconds | Build stopping |
| Stage initialising | 1 hour, 13 minutes ago | 421 milliseconds | Initialising stage… |
| Stage ready | 1 hour, 13 minutes ago | 46 milliseconds | Agent ‘wrpbuildce02’ reserved and license allocated for stage ‘Test GUI’ |
| Stage awaiting agent | 1 hour, 13 minutes ago | 31 milliseconds | Agent shared resources acquired for agent ‘wrpbuildce02’: Stage has acquired the following shared resource locks from agent ‘wrpbuildce02’: a read lock on agent shared resource ‘Agent.GUITests.InProgress’ with single lock quota for current agent |
| Stage awaiting agent | 1 hour, 13 minutes ago | 281 milliseconds | Reserving agent for stage ‘Test GUI’ which is at position 7 in the stage queue. |
| Stage awaiting agent | 1 hour, 13 minutes ago | 865 milliseconds | Suitable agent conditions met, checking 7 stages which are higher in stage queue. |
| Stage awaiting agent | Yesterday at 22:00 | 10 hours, 43 minutes | Adding stage ‘Test GUI’ to stage queue. There are currently 5 stages on the queue. |

| Status | Time | Duration | Message |
|---|---|---|---|
| Build stopped by user | 1 hour, 22 minutes ago | 0 milliseconds | Build stopped by user ‘-------’ |
| Build stopping | 1 hour, 22 minutes ago | 2 seconds | Build stopping |
| Stage awaiting agent | 1 hour, 22 minutes ago | 8 seconds | No agents are currently available to execute the stage. wrp-buildcews02: The expression [‘$Agent.Hostname.ToLower()$’ equals ‘$Build.Stages.First().AgentName.ToLower()$’] evaluated to False. ‘wrp-buildcews02’ does not equal ‘wrp-buildcews01’. This may be because a required tool is not installed on the agent. wrp-testauto01: The expression [‘$Agent.Hostname.ToLower()$’ equals ‘$Build.Stages.First().AgentName.ToLower()$’] evaluated to False. ‘wrp-testauto01’ does not equal ‘wrp-buildcews01’. This may be because a required tool is not installed on the agent. wrp-testauto02: The expression [‘$Agent.Hostname.ToLower()$’ equals ‘$Build.Stages.First().AgentName.ToLower()$’] evaluated to False. ‘wrp-testauto02’ does not equal ‘wrp-buildcews01’. This may be because a required tool is not installed on the agent. wrp-testauto03: The expression [‘$Agent.Hostname.ToLower()$’ equals ‘$Build.Stages.First().AgentName.ToLower()$’] evaluated to False. ‘wrp-testauto03’ does not equal ‘wrp-buildcews01’. This may be because a required tool is not installed on the agent. wrpbuildce01: The expression [‘$Agent.Hostname.ToLower()$’ equals ‘$Build.Stages.First().AgentName.ToLower()$’] evaluated to False. ‘wrpbuildce01’ does not equal ‘wrp-buildcews01’. This may be because a required tool is not installed on the agent. wrpbuildce02: The expression [‘$Agent.Hostname.ToLower()$’ equals ‘$Build.Stages.First().AgentName.ToLower()$’] evaluated to False. ‘wrpbuildce02’ does not equal ‘wrp-buildcews01’. This may be because a required tool is not installed on the agent. wrpbuildce03: The expression [‘$Agent.Hostname.ToLower()$’ equals ‘$Build.Stages.First().AgentName.ToLower()$’] evaluated to False. ‘wrpbuildce03’ does not equal ‘wrp-buildcews01’. This may be because a required tool is not installed on the agent. wrpbuildcesrv01: The expression [‘$Agent.Hostname.ToLower()$’ equals ‘$Build.Stages.First().AgentName.ToLower()$’] evaluated to False. ‘wrpbuildcesrv01’ does not equal ‘wrp-buildcews01’. This may be because a required tool is not installed on the agent. wrpbuildcesrv01: The expression [‘$Agent.MSBuild.16.0.PathX86$’ exists] evaluated False. ‘$Agent.MSBuild.16.0.PathX86$’ does not exist. This may be because a required tool is not installed on the agent. Agent: wrp-buildcews01 is currently executing its maximum number of concurrent build stages: 1 |
| Stage awaiting agent | Yesterday at 20:01 | 12 hours, 42 minutes | Adding stage ‘Build IBIS project’ to stage queue. There are currently 1 stages on the queue. |
| Stage awaiting agent | Yesterday at 20:01 | 31 milliseconds | Queuing stage ‘Clone’ |
| Stage not run | Yesterday at 20:01 | 15 milliseconds | Skip conditions for stage ‘Build IBIS project’ were not met |
| | | | The expression [‘%DeliverToTrunkStartedBy%’ does not equal ‘’] evaluated to False. ‘’ equals ‘’. |
| | | | The expression [‘%StartedBy%’ does not equal ‘’] evaluated to False. ‘’ equals ‘’. |
| Stage completed | Yesterday at 20:01 | 46 milliseconds | Stage completed. |
| Stage executing | Yesterday at 20:01 | 15 milliseconds | Completing stage. |
| Stage executing | Yesterday at 20:01 | 46 milliseconds | Updating unit test totals |
| Stage executing | Yesterday at 20:01 | 31 milliseconds | Registering artifacts |
| Stage executing | Yesterday at 20:01 | 0 milliseconds | Loading build variables from stage |
| Stage executing | Yesterday at 20:01 | 15 milliseconds | Stage done on agent ‘wrp-buildcews01’. |
| Stage executing | Yesterday at 20:01 | 1 second | Syncing workspace from agent ‘wrp-buildcews01’ to server |
| Stage executing | Yesterday at 20:01 | 15 milliseconds | Creating archive files in workspace of agent ‘wrp-buildcews01’ ready to copy to server |
| Stage executing | Yesterday at 20:00 | 1 minute | Executing stage on agent ‘wrp-buildcews01’ |
| Stage initialising | Yesterday at 20:00 | 1 second | Initialising workspace on agent ‘wrp-buildcews01’ |
| Stage initialising | Yesterday at 20:00 | 62 milliseconds | Sending stage ‘Clone’ to agent ‘wrp-buildcews01’ |
| Stage initialising | Yesterday at 20:00 | 771 milliseconds | Initialising stage… |
| Stage ready | Yesterday at 20:00 | 78 milliseconds | Agent ‘wrp-buildcews01’ reserved and license allocated for stage ‘Clone’ |
| Stage awaiting agent | Yesterday at 20:00 | 15 milliseconds | Agent shared resources acquired for agent ‘wrp-buildcews01’: Stage has acquired the following shared resource locks from agent ‘wrp-buildcews01’: a read lock on agent shared resource ‘Agent.AgentRepoStore.Clone’ with single lock quota for current agent |
| Stage awaiting agent | Yesterday at 20:00 | 124 milliseconds | Reserving agent ‘wrp-buildcews01’ for stage ‘Clone’ which is at position 1 in the stage queue. |
| Stage awaiting agent | Yesterday at 20:00 | 322 milliseconds | Adding stage ‘Clone’ to stage queue. There are currently 0 stages on the queue. |